Overview

This summer I worked as a Research Intern at Baidu USA, where I worked on research and development for the Apollo autonomous driving platform.

The rest of this post is organized as follows.

- First, I give a general introduction to Apollo and its architecture.

- Second, I describe my duties and what I accomplished in detail.

- Finally, I discuss my internship experience and how it relates to my program at Columbia University, my career goals, and my aspirations.

1. Introduction to Apollo

1.1 Overview of Apollo

Apollo is a high-performance, flexible architecture that accelerates the development, testing, and deployment of autonomous vehicles. It is open source (GitHub repository) and released under the Apache-2.0 License, so anyone in the community can use it and contribute to it. It is an open platform whose primary purpose is to become a vibrant autonomous driving ecosystem by providing a comprehensive, safe, secure, and reliable solution that supports all major features and functions of an autonomous vehicle. Its ambition is no less than to revolutionize the automotive and transportation industries, and there will be challenges of unprecedented scale and complexity along the way.

1.2 Architecture of Apollo

For reference, here is the architecture figure from Apollo’s website:

As I understand it, the skeleton of Apollo is a publish-subscribe system made up of many modules (called Cyber RT nodes in Apollo). Each node behaves like a ROS node, but nodes exchange Protocol Buffer messages and are built with Bazel. For example, a Cyber node for the camera reads the images taken by the camera at a set frequency and publishes them. Any node that needs this data, such as some nodes in the prediction module, subscribes to it.
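To make the publish-subscribe idea concrete, here is a minimal in-process sketch in Python. It is only a toy analogy, not Cyber RT’s API: the `Bus` class, its `subscribe`/`publish` methods, and the channel name `/sensor/camera` are hypothetical, and raw bytes stand in for the serialized Protocol Buffer messages that real nodes exchange.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Callback = Callable[[bytes], None]


class Bus:
    """Toy message bus (hypothetical, not Cyber RT): channel name -> subscribers."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callback]] = defaultdict(list)

    def subscribe(self, channel: str, callback: Callback) -> None:
        # A consumer node registers interest in a channel.
        self._subscribers[channel].append(callback)

    def publish(self, channel: str, payload: bytes) -> int:
        # A producer node pushes a message; every subscriber of the channel receives it.
        subscribers = self._subscribers.get(channel, [])
        for callback in subscribers:
            callback(payload)
        return len(subscribers)


# Hypothetical usage: a "camera" node publishes a frame, a "prediction" node receives it.
bus = Bus()
prediction_inbox: List[bytes] = []
bus.subscribe("/sensor/camera", prediction_inbox.append)
delivered = bus.publish("/sensor/camera", b"frame-0001")
```

In this pattern the publisher does not need to know who is listening, which is what allows modules to be added or replaced without touching their producers.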

In essence, Apollo frees developers from wasting time and resources re-implementing fundamental components, allowing each member of the ecosystem to focus on their specific area of expertise, which will dramatically increase the speed of innovation.

Apollo’s comprehensive, modular solution allows anyone to use as much or as little of the existing source code and data as they need. Open source code can be modified, and open capability components accessible through an API can be replaced with proprietary implementations. All of this can then be contributed back to Apollo, redistributed, and commercialized.

Critical capabilities that would challenge most development efforts, such as ensuring reliability, handling failover, and guaranteeing security, are provided as readily available components. Everyone can benefit from Apollo’s capabilities to improve their individual competitive advantage.

2. My duties, work, and accomplishments

My offer letter listed my duties as follows:

- Work with world-class talented group of software engineers and scientists to design, implement and integrate cutting edge autonomous driving technologies on Apollo platform.

- Practice data-driven methodology in developing autonomous driving software and enjoy playing with data sets at large scale.

- Utilize what you learn at college to build, testify and optimize the overall onboard computation system to meet real-time needs.

Specifically, my work this summer was building a complete, end-to-end audio module for emergency vehicle detection.

2.1 Motivation

California’s autonomous driving license requires autonomous vehicles to actively avoid emergency vehicles. To meet this requirement, the company decided to develop a mechanism consisting of two main modules: an emergency audio detection module and an emergency video detection module. I was responsible for the emergency audio module, whose objective is to collect sound and detect emergency vehicles such as ambulances, fire engines, and police cars from their sirens. In addition, when an emergency vehicle is detected, the module estimates its direction and whether it is approaching or departing.

2.2 System Design and my work

The complete system design covers hardware selection, driver I/O, and both the offline (training) and online (inference) parts of the emergency detection module.

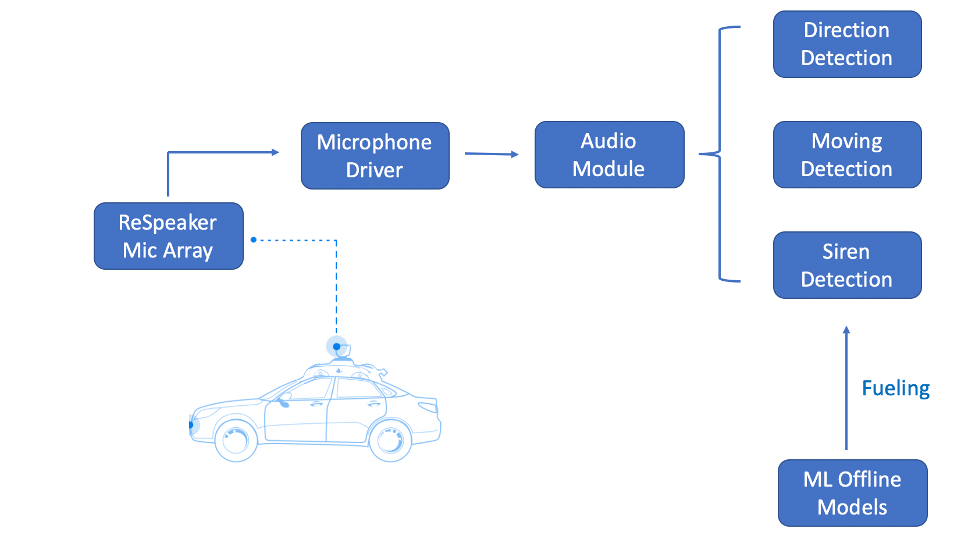

The figure above gives a rough picture of this design. In Apollo, each module acts as a Cyber node, and nodes communicate with each other through Protocol Buffer messages.

2.2.1 ReSpeaker Mic Array

We selected the ReSpeaker Mic Array as our hardware. It provides six channels: four carry the raw signals from the four microphones, one carries processed audio for ASR (Automatic Speech Recognition), and the last one is for playback. Having multiple microphones at different positions lets us apply algorithms that estimate the direction of the sound source and its approach/departure state, and it also makes siren detection more robust.
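Because the device delivers all six channels interleaved frame by frame, the four raw microphone signals have to be pulled out before any direction or siren processing. The sketch below is illustrative only: it assumes 16-bit little-endian PCM and a channel order of 0 = processed ASR audio, 1 to 4 = raw microphones, 5 = playback, both of which should be verified against the actual firmware. The function name `split_raw_mics` is hypothetical.

```python
import struct
from typing import List

NUM_CHANNELS = 6                 # 6-channel firmware
RAW_MIC_CHANNELS = (1, 2, 3, 4)  # assumed positions of the four raw microphones
SAMPLE_WIDTH = 2                 # assumed 16-bit signed little-endian PCM


def split_raw_mics(chunk: bytes) -> List[List[int]]:
    """Split one interleaved chunk into one sample list per raw microphone."""
    frame_size = NUM_CHANNELS * SAMPLE_WIDTH
    if len(chunk) % frame_size != 0:
        raise ValueError("chunk length is not a whole number of frames")
    count = len(chunk) // SAMPLE_WIDTH
    # "<" = little-endian, "h" = int16; samples are ordered frame by frame.
    samples = struct.unpack("<%dh" % count, chunk)
    # Channel c of frame f sits at index f * NUM_CHANNELS + c, so stride by NUM_CHANNELS.
    return [list(samples[c::NUM_CHANNELS]) for c in RAW_MIC_CHANNELS]


# Two fake frames in which channel c of frame f holds the value 10 * c + f.
demo = struct.pack("<12h", *[10 * c + f for f in range(2) for c in range(NUM_CHANNELS)])
mics = split_raw_mics(demo)  # [[10, 11], [20, 21], [30, 31], [40, 41]]
```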

2.2.2 Microphone Driver

This is the driver for the ReSpeaker Mic Array, written in C++. It uses PortAudio to capture audio from the hardware at a configurable rate and supports settings such as sample rate, sample width, and chunk size. These settings are loaded at runtime from a Protocol Buffer configuration file.

- For this driver, I first wrote a Python version using the PyAudio library so that we could start collecting data quickly. I then dug into PortAudio, the C library underneath PyAudio, and rewrote the whole module in C++. This greatly improved efficiency, both in startup time and in the processing time for each subsequent chunk.

- The microphone driver was essentially my first commit to Apollo; the source code of the C++ version can be found here.
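The configuration fields relate through simple arithmetic. One frame holds one sample per channel, so a chunk occupies `chunk_size * channels * sample_width` bytes and covers `chunk_size / sample_rate` seconds of audio. The sketch below uses a plain Python dataclass as a hypothetical stand-in for the driver’s protobuf configuration. The field names and default values (16 kHz, 16-bit, 6 channels, 1024 frames) are illustrative assumptions, not Apollo’s actual settings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MicConfig:
    # Hypothetical stand-in for the driver's protobuf config; names and values are illustrative.
    sample_rate: int = 16000   # frames per second
    sample_width: int = 2      # bytes per sample (16-bit PCM)
    channels: int = 6          # ReSpeaker 6-channel firmware
    chunk_size: int = 1024     # frames delivered per read

    def bytes_per_chunk(self) -> int:
        # Each frame holds one sample per channel.
        return self.chunk_size * self.channels * self.sample_width

    def chunk_duration_s(self) -> float:
        # Audio time covered by one chunk, i.e. how long each read must wait for data.
        return self.chunk_size / self.sample_rate


cfg = MicConfig()
print(cfg.bytes_per_chunk(), cfg.chunk_duration_s())  # 12288 0.064
```

Larger chunks mean fewer reads per second but a longer wait before each chunk is available, so chunk size trades per-read overhead against latency.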

2.2.3 Audio Module

This is the online inference part. It consists of three submodules: direction detection, moving detection, and siren detection. The first two use rule-based algorithms applied directly to the raw data after some signal processing. The last one, siren detection, relies on the model produced by the offline part: we train our siren-detection model privately offline and publish only the skeleton code, without the trained model. The code for this part can also be found here.
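The exact moving-detection rules are not spelled out here, so the following is only a hypothetical example of a rule-based approach/departure check. It compares the RMS loudness of consecutive chunks and classifies the source as approaching if the level keeps rising and departing if it keeps falling. The function names and the `ratio` threshold are assumptions made for illustration.

```python
import math
from typing import List, Sequence


def rms(samples: Sequence[float]) -> float:
    """Root-mean-square level of one chunk (0.0 for an empty chunk)."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def moving_state(chunks: List[Sequence[float]], ratio: float = 1.1) -> str:
    """Hypothetical rule: classify a source from its loudness trend.

    'approaching' if the RMS level grows by more than `ratio` between every pair
    of consecutive chunks, 'departing' if it shrinks by more than `ratio` every
    time, otherwise 'unknown'.
    """
    levels = [rms(chunk) for chunk in chunks]
    pairs = list(zip(levels, levels[1:]))
    if not pairs:
        return "unknown"
    if all(later > earlier * ratio for earlier, later in pairs):
        return "approaching"
    if all(earlier > later * ratio for earlier, later in pairs):
        return "departing"
    return "unknown"


louder = [[1.0, -1.0], [2.0, -2.0], [4.0, -4.0]]
print(moving_state(louder))                  # approaching
print(moving_state(list(reversed(louder))))  # departing
```

Loudness alone is easily fooled by wind or passing traffic. A practical rule would likely smooth the levels over time and combine them with other cues.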

- My work in this part mainly focused on data processing and direction detection. Specifically, I applied the GCC-PHAT (Generalized Cross-Correlation with Phase Transform) algorithm, which combines signal processing steps such as the FFT, to estimate the direction of the sound source from the four microphones at the four corners of the array. This was implemented with LibTorch, PyTorch’s C++ library.
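GCC-PHAT estimates the time difference of arrival (TDOA) τ between two microphones. It normalizes the cross-power spectrum of the two signals so that only phase information remains, transforms it back to the time domain, and takes the lag of the highest peak. Under a far-field assumption, two microphones a distance d apart then give an arrival angle θ = arcsin(c·τ / d), where c is the speed of sound. The Python sketch below illustrates both steps for a single microphone pair. It uses a naive O(n²) DFT so that it needs only the standard library; the implementation described above used LibTorch instead. The function names and the 10 cm spacing are hypothetical, and combining the pairs of a four-microphone array into one bearing is not shown.

```python
import cmath
import math
import random
from typing import List, Optional, Sequence


def dft(x: Sequence[complex], inverse: bool = False) -> List[complex]:
    """Naive O(n^2) discrete Fourier transform (use a real FFT in production)."""
    n = len(x)
    sign = 1.0 if inverse else -1.0
    out = []
    for k in range(n):
        acc = sum(x[t] * cmath.exp(sign * 2j * math.pi * k * t / n) for t in range(n))
        out.append(acc / n if inverse else acc)
    return out


def gcc_phat(sig: Sequence[float], ref: Sequence[float], fs: float,
             max_tau: Optional[float] = None) -> float:
    """Delay of `sig` relative to `ref` in seconds (positive: `sig` arrives later)."""
    n = len(sig) + len(ref)  # zero-pad so the circular correlation does not wrap
    spec_sig = dft(list(sig) + [0.0] * (n - len(sig)))
    spec_ref = dft(list(ref) + [0.0] * (n - len(ref)))
    cross = [a * b.conjugate() for a, b in zip(spec_sig, spec_ref)]
    # PHAT weighting: divide by the magnitude so only the phase (i.e. the delay) remains.
    phat = [c / abs(c) if abs(c) > 1e-12 else 0j for c in cross]
    corr = [v.real for v in dft(phat, inverse=True)]
    max_shift = n // 2
    if max_tau is not None:
        max_shift = min(int(max_tau * fs), max_shift)
    # Positive lags sit at the start of `corr`; negative lags wrap around to the end.
    best_lag = max(range(-max_shift, max_shift + 1), key=lambda lag: corr[lag % n])
    return best_lag / fs


def tdoa_to_angle(tau: float, mic_distance: float, speed_of_sound: float = 343.0) -> float:
    """Far-field arrival angle in degrees, measured from the pair's broadside."""
    x = max(-1.0, min(1.0, speed_of_sound * tau / mic_distance))
    return math.degrees(math.asin(x))


# Synthetic check: a noise burst reaches the second microphone 2 samples later.
fs = 16000
MIC_DISTANCE = 0.1  # hypothetical 10 cm spacing
rng = random.Random(0)
ref = [rng.uniform(-1.0, 1.0) for _ in range(48)] + [0.0] * 16
sig = [0.0] * 2 + ref[:-2]
tau = gcc_phat(sig, ref, fs, max_tau=MIC_DISTANCE / 343.0)
angle = tdoa_to_angle(tau, MIC_DISTANCE)
```

Limiting the search with `max_tau = d / c` discards lags that are physically impossible for the given spacing. This makes the peak search more robust to spurious correlations.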

2.2.4 ML Offline Models

The objective of this part is to train a model that decides whether a siren (possibly of several kinds) is present within a given time window. It combines techniques from signal processing, machine learning, and deep learning. It must account for many confounding factors, such as the environment (noise level, wind, etc.) and physical effects like the Doppler effect. Since this part is unpublished and under NDA, I will only discuss it at a high level.
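The Doppler effect mentioned above is easy to quantify. For a stationary listener and a source moving toward the listener with radial speed v, the observed frequency is f_obs = f_src · c / (c − v), with v negative when the source recedes. The sketch below evaluates this textbook formula. It is not part of the NDA-covered model, and the 1000 Hz tone and 20 m/s speed in the example are hypothetical values.

```python
SPEED_OF_SOUND = 343.0  # m/s, dry air at roughly 20 degrees Celsius


def observed_frequency(f_source: float, radial_speed: float,
                       c: float = SPEED_OF_SOUND) -> float:
    """Frequency heard by a stationary listener from a moving source.

    radial_speed > 0: source moving toward the listener (pitch rises);
    radial_speed < 0: source moving away (pitch falls).
    """
    if radial_speed >= c:
        raise ValueError("source must move slower than sound")
    return f_source * c / (c - radial_speed)


# Hypothetical 1000 Hz siren tone on a vehicle driving at 20 m/s (72 km/h).
approaching = observed_frequency(1000.0, 20.0)   # about 1061.9 Hz
departing = observed_frequency(1000.0, -20.0)    # about 944.9 Hz
```

In this example, the pitch drops by roughly 12% of the source frequency as the vehicle passes. A detector tuned to one fixed siren frequency could therefore miss it. The same shift also hints at whether the vehicle is approaching or departing.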

3. How the Experience Contributes to My Career

3.1 Overall experience

Due to COVID-19 and other unexpected circumstances (my previous offer was revoked, and then Baidu required an SSN while the SSA offices were closed), I received this internship offer relatively late compared with my friends. I started on July 6th. Fortunately, I had really helpful co-workers and an experienced boss, and they helped me a lot.

3.2 Contribution to my career

Overall, the internship was a great experience. For the first time, I went through the whole development cycle of a module in an industrial product, including planning, design, implementation, and testing. It gave me an instructive preview of what my future life and work will be like.

3.3 Relation to my track

This internship is also strongly related to my program at Columbia University. Last semester I took Design Using C++, taught by Bjarne Stroustrup. I have written a lot of C++ since my freshman year of undergraduate study, but I had never had the chance to write C++ for a real industrial product; this internship fulfilled that aspiration. The internship also relates to my track in the department: this summer, we explored different machine learning approaches to detecting siren sounds and possibly other sounds.